A Valentine's Day dinner at home is the perfect idea for the evening of February 14. Of course, every day is a good day to show your love, but a Valentine's Day dinner is a special opportunity to cook something delicious and spend time together. Discover the 7 key elements of a successful Valentine's Day dinner at home! Dinner for Valentine's […]

Valentine's Day is a special day when we celebrate love and show appreciation for the people closest to us. Although the holiday can be quite commercial, it also gives us a chance to express our creativity and create a romantic atmosphere at home. In this article, we look at traditional and modern approaches to decorating your interior for Valentine's Day. […]

Trending colors

Light interiors

Dark interiors

More ideas

Home is not just the place where we live; it is our refuge, our personal territory, where we spend a large […]

Bedroom color: is a beige bedroom the versatile choice? Beige wall paints, furniture, textiles, decorations […]

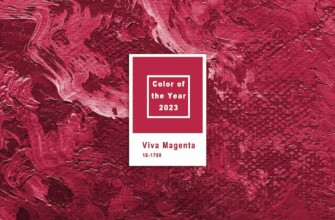

Pantone Color Institute has named Viva Magenta, a deep crimson red, its Color of the Year for 2023 […]

The fashionable wall colors of 2023 make up a varied palette in which you will find both muted shades […]

Buying a new rug can often be a major investment and seem anything but budget-friendly.

PPG has already chosen its color of the year for 2023. The Vining Ivy shade, on the border between blue and green, is reminiscent of […]

Earth tones have been popular in interiors for several years running. This is because […]

Kitchen wall color is usually chosen to complement the furniture and other elements […]